History Of Concurrent Engineering


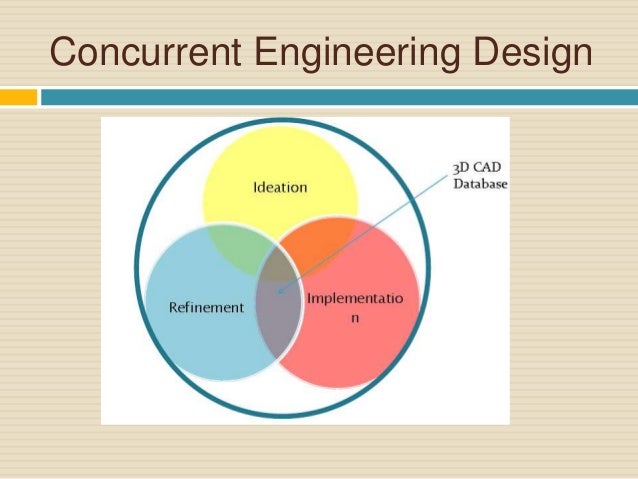

Concurrent engineering is a method used in product development. It differs from the traditional product development approach in that it employs simultaneous, rather than sequential, processes. By completing tasks in parallel, product development can be accomplished more efficiently and with substantial cost savings.

Rather than completing all physical manufacturing of a prototype before performing any testing, concurrent engineering allows design and analysis to occur at the same time, and multiple times, before actual deployment. This multidisciplinary approach emphasizes teamwork through the use of cross-functional teams, and it allows employees to work collaboratively on all aspects of a project from start to finish.

Also known as the iterative development method, concurrent engineering requires continual review of a team’s progress and frequent revision of project plans. The rationale behind this forward-looking approach is that the earlier errors are discovered, the easier and less costly they are to correct. Proponents of this method claim that it offers several benefits, including higher product quality for the end user, shorter product development times, and lower costs for both the manufacturer and the consumer.

Throughout the design of a part or system of parts, there is a process that engineers follow. Depending on what they are designing and where the emphasis lies, the specific processes they go through can differ greatly. This section attempts to gather many different concepts of the design process in one place.

Although design processes differ in many ways, here is a brief overview of what should happen. The first step in the design process is to define the design: write down everything you are working towards and produce a brief, dense summary of what the design is. Normally, a customer has to express a need for a product to be designed. Communication with the customer can come directly, from marketing research, or from some other source. It can benefit both the customer and the engineer if a direct line of communication is set up. Once customer requirements are laid out, they are translated into engineering requirements. These requirements are then used to come up with initial concept ideas. Usually many concepts are conceived (sometimes hundreds or even thousands) and then narrowed down to the most feasible designs. A few concepts are then chosen to be prototyped and tested. Based on testing, they are improved and a final design is chosen. Once a design is chosen, manufacturing can begin and the customer receives the finished product.
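The narrowing step described above can be sketched as a simple weighted-scoring screen. This is a minimal illustration under stated assumptions: the criteria, weights, mass limit, and concept names are all hypothetical, and weighted scoring is only one of many possible ways to judge feasibility and merit.

```python
def screen_concepts(concepts, weights, feasible, keep=3):
    """Drop infeasible concepts, rank the rest by weighted score, keep the best few."""
    def score(attrs):
        return sum(weight * attrs[criterion] for criterion, weight in weights.items())

    candidates = [name for name, attrs in concepts.items() if feasible(attrs)]
    ranked = sorted(candidates, key=lambda name: score(concepts[name]), reverse=True)
    return ranked[:keep]


def within_mass_limit(attrs):
    # Hypothetical hard requirement derived from the engineering requirements.
    return attrs["mass_kg"] <= 15


# Hypothetical concepts scored 1-5 on each criterion (higher is better).
concepts = {
    "C1": {"performance": 4, "cost": 3, "mass_kg": 12},
    "C2": {"performance": 5, "cost": 2, "mass_kg": 18},
    "C3": {"performance": 3, "cost": 5, "mass_kg": 10},
    "C4": {"performance": 2, "cost": 4, "mass_kg": 9},
    "C5": {"performance": 4, "cost": 4, "mass_kg": 14},
}
weights = {"performance": 0.6, "cost": 0.4}

print(screen_concepts(concepts, weights, within_mass_limit))  # ['C5', 'C3', 'C1']
```

Here C2 scores well on performance but is removed before ranking because it violates the hard mass limit; the surviving concepts are the ones that would go on to prototyping.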


Design Processes

(Chris Fagan)

The design process for concurrent engineering can vary quite a bit depending on the size and nature of the project. However, most approaches follow a similar structure outlined below:

- Define customer requirements

- Define engineering requirements

- Conceive design solutions

  - Customer Requirements

  - Come up with multiple designs/ideas

- Approval

  - Funding

  - Happens throughout process

- Develop prototypes

  - Develop/optimize few ideas from original concepts

- Approval

- Implement design

- Recheck

Interview with a Designer

by Chris Cookston

Examples of Design Processes

(Chris Fagan)

- Design Processes that have a history of success

- Focusing on processes that have been shown to work

- Differences between one-off parts and mass-produced parts

- Automotive vs. Aeronautics vs. NASA JPL for example

SMAD

Space Mission Analysis and Design, by James R. Wertz and Wiley J. Larson

This book is a strong foundation not only for space mission design but also for complex system design.

Toyota


(Karl Jensen)(Adam Aschenbach)

Toyota is listed specifically in this wikibook because Toyota has been an originator of many design techniques that are in use today.

One such example is lean manufacturing. Lean manufacturing is the idea of getting more value with less work, and it was derived from the Toyota Production System (TPS). More information can be found in the lean manufacturing wiki.

A few things that set Toyota apart from other manufacturers include:

• Toyota considers a broader range of possible designs and delays certain decisions longer than other automotive companies do, yet has what may be the fastest and most efficient vehicle development cycles in the industry.

• Set-based concurrent engineering begins by broadly considering sets of possible solutions and gradually narrowing the set of possibilities to converge on a final solution.

• This makes finding better or the best solutions more likely.

• The above figure depicts the way that most American car companies approached design. The figure represents a point-based serial engineering approach, which quickly settles on one solution that will work. Once this solution is found, it is optimized to find a better solution, but not necessarily the best solution.

• The above figure depicts the way that Toyota designs its products. The process described is a set-based concurrent engineering approach. This approach involves creating a large pool of ideas that groups can communicate about. This pool is eventually narrowed down to the final solution, which is usually the best solution. Using this system of concurrent engineering allows a robust solution to be found without much need for optimization: instead of optimizing one design, many designs may be prototyped and evaluated to determine which is best.[1]
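A toy contrast between the point-based and set-based approaches, assuming each team's acceptable options for one shared design parameter can be written as a set of values. The team names, thickness values, and the rule that the lead team commits to its smallest value are all hypothetical; real set-based engineering narrows many interacting parameters over several stages.

```python
def set_based_converge(team_sets):
    """Set-based: start from every option any team proposed, then let each
    team discard what it cannot accept. What survives is feasible for all."""
    candidates = set().union(*team_sets)
    for acceptable in team_sets:
        candidates &= set(acceptable)  # narrow the set instead of committing early
    return sorted(candidates)


def point_based_pick(team_sets, lead=0):
    """Point-based: the lead team commits to one option up front; every team
    that cannot accept it has to rework its own design."""
    choice = min(team_sets[lead])  # hypothetical rule: lead picks its smallest value
    needs_rework = [i for i, acceptable in enumerate(team_sets) if choice not in acceptable]
    return choice, needs_rework


# Hypothetical acceptable bracket thicknesses (mm) for each team.
body = {2, 3, 4, 5}
chassis = {3, 4, 5, 6}
manufacturing = {4, 5, 6}
teams = [body, chassis, manufacturing]

print(set_based_converge(teams))  # [4, 5]
print(point_based_pick(teams))    # (2, [1, 2])
```

In this toy case the early point-based commitment forces two of the three teams into rework, while the set-based intersection keeps two options open that every team can already accept.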

Design Under Changing Requirements

(Chris Fagan)

It has been estimated that 35 percent of product development delays are a direct result of changes to the product definition during the design process.[2] With numerous groups working on the same project, the requirements can continually change. A design process that accommodates change was outlined in six steps.[3] These steps for designing when the system requirements are changing are quoted below.[3]

1. Establish and foster open communication between customers and design engineers. This includes communication within a design team.

2. Develop and explicitly write down design requirements as soon as possible. It is important to identify requirements for component interfaces and other possible unspoken product specifications. Analyze the list for completeness and to seek out missing requirements.

3. Examine the list of requirements to identify which requirements are likely to change and which are stable. In the early stages of design spend more time on the enduring components.

4. Predict future customer needs and requirement changes. Make allowances for changes and create flexibility in components to accommodate future changes.

5. Use an iterative approach to product development. Quick turnover of designs and prototypes provides a method for testing requirements and discovering unanticipated requirements.

6. Build flexibility into a design by selecting product architectures that tolerate changing requirements. This can be achieved by over-designing components to meet future needs, particularly in components that are likely to change.

These steps have been established during the ongoing design of the Bug ID project and have been helpful in preventing lost time due to design changes. However, the system was not looked at as a complex system.

Concurrent Design Tools

(Chris Fagan)

Concurrent engineering projects bring many disciplines together in a single design process. Tools have been developed to help designers with different knowledge and backgrounds work together on a single design.

System Design with SysML

(Chris Fagan)

SysML is a graphical language that uses a modeling tool to aid in the creation of diagrams. The basic idea of SysML is to create diagrams that capture the information and actions of a system. SysML then links them together, allowing the model information to be used to help “specify, design, analyze and verify systems”.[4] Each of the nine diagram types in SysML represents a specific part of the system. The diagram types in SysML are outlined below:[4]

1. Requirement Diagrams represent the requirements and their interactions in the system.

2. Activity Diagrams illustrate the behavior of the system in terms of the flow of inputs and outputs between actions.

3. Sequence Diagrams also portray the behavior of the system, but in terms of messages exchanged between parts.

4. State Machine Diagrams represent behavior in terms of an entity's transitions between states in response to events.

5. Use Case Diagrams render system functionality in terms of external actors. The external actors use the entities in the diagram to complete specific goals.

6. Block Definition Diagrams represent the structural components, composition, and classification of the system.

7. Internal Block Diagrams show the different blocks of the system and the connections between the blocks.

8. Parametric Diagrams contain property constraints, such as equations, that aid engineering analysis.

9. Package Diagrams show how the model is organized, using packages and the relationships between them to represent the model.

Together these linked diagrams model the entire system, including elements within the physical environment such as people, facilities, hardware, software, and data.[5] The diagrams are used across disciplines to show the flow of data through the design.

Limitations and Benefits of SysML

(Farzaneh Farhangmehr)

For efficient system modeling, the modeling language should be unified. Toward this goal, the Unified Modeling Language (UML) presents a standard way to write system models, including conceptual things (for example, system functions and software components). However, it has some limitations; for example, because of its software bias, UML is not well suited to describing systems composed of both hardware and software. The Systems Modeling Language (SysML) extends UML with several improvements intended to address these limitations. It attempts to provide a modeling language for complex systems that also include non-software components (e.g., hardware and information). Based on the general definition: “SysML is a general-purpose graphical modeling language for specifying, analyzing, designing and verifying complex systems that may include hardware, software, information, personnel, procedures, and facilities.” [6]

SysML presents a general-purpose modeling language for complex systems applications. It can cover complex systems spanning a broad range of domains (especially hardware and software) by facilitating integration between systems and software. It allows the design team to determine how the system interacts with its environment, in addition to understanding how different parts of the system interact with each other. In addition, since SysML is a smaller language than UML (it omits many of UML's software-centric constructs), SysML is easier to understand, more flexible, and easier to express. Furthermore, its requirement diagram provides a technique for requirement management. Finally, since SysML diagrams describe allocations between behavior, structure, and constraints (in general, the allocation of function to form, especially the deployment of software on a hardware platform [7]), it can reduce the cost of the design.

Trade Studies

(Chris Fagan)

Any complex design task will require some trade-off among performance, cost, and risk. The results of these trade-offs can be seen in a trade study, which is used to find the configurations that best meet the requirements.[8] Trade studies often carry a large amount of uncertainty because knowledge of the design is limited when the study is produced. Bayesian models can be used to support these trade studies and to show the probability of certain events.

A Bayesian model has three elements:[9]

1. A set of beliefs about the world

2. A set of decision alternatives

3. A preference over the possible outcomes of action

Although these Bayesian models may contain information that is inconsistent or incomplete, they are especially useful in situations characterized by uncertainty and risk. In these cases, Bayesian models suggest the best choice to pursue given the model statement.[9] This suggestion comes from modeling the decision making during the design process. There is a significant advantage in the design process just from structuring the problem. The Bayesian model provides analytical support to the decision-making process.[9] Bayesian models can address:[8]

- Targeting uncertainty

- Evaluating uncertainty

- Importance of uncertainty

- Mix of qualitative and quantitative criteria

- Fusion of multiple team member evaluations

- Determining what to do next to ensure the best possible decision is being made

Overall, applying Bayesian models to trade studies clarifies decisions and provides direction.
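The three elements of a Bayesian model listed above map naturally onto a small expected-utility calculation. The sketch below is illustrative only: the actuator alternatives, probabilities, utilities, and test likelihoods are made up, and it shows the basic mechanics (expected utility plus a Bayes' rule update) rather than any specific trade-study tool.

```python
# 1. Beliefs about the world: prior probability of each operating condition.
beliefs = {"low_load": 0.7, "high_load": 0.3}

# 2. Decision alternatives.
alternatives = ["actuator_A", "actuator_B"]

# 3. Preferences over outcomes, expressed as utilities in [0, 1].
utility = {
    ("actuator_A", "low_load"): 0.9, ("actuator_A", "high_load"): 0.2,
    ("actuator_B", "low_load"): 0.6, ("actuator_B", "high_load"): 0.7,
}


def expected_utility(alt, beliefs, utility):
    """Probability-weighted utility of choosing `alt`."""
    return sum(p * utility[(alt, state)] for state, p in beliefs.items())


def best_alternative(alternatives, beliefs, utility):
    """The model's suggestion: the alternative with the highest expected utility."""
    return max(alternatives, key=lambda alt: expected_utility(alt, beliefs, utility))


def update_beliefs(beliefs, likelihood):
    """Bayes' rule: posterior is proportional to prior times P(evidence | state)."""
    unnormalized = {s: p * likelihood[s] for s, p in beliefs.items()}
    total = sum(unnormalized.values())
    return {s: v / total for s, v in unnormalized.items()}


print(best_alternative(alternatives, beliefs, utility))  # actuator_A (0.69 vs 0.63)

# Hypothetical load-test result that is 4x more likely under high load.
posterior = update_beliefs(beliefs, {"low_load": 0.2, "high_load": 0.8})
print(best_alternative(alternatives, posterior, utility))  # actuator_B
```

The second call shows how new evidence can reverse the recommendation, which is the sense in which the model indicates "what to do next": a test that could shift the beliefs is worth running before committing.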

ARL Trade Space Visualizer (ATSV)

(Farzaneh Farhangmehr)

In the early stages of design, one of the main goals of decision makers is to generate trade studies of possible design options that can meet design requirements, and to select the best one. In traditional multi-objective optimization techniques, decision makers have to quantify their preferences a priori. To support this, a Pareto frontier of non-dominated solutions can be computed so that decision makers can evaluate preferences. However, decision makers sometimes cannot successfully determine their preferences a priori. To address this problem, the ARL Trade Space Visualizer (ATSV) [10] has been developed to allow exploration of a trade space. The goal of ATSV is generally to provide a technique for populating a multi-objective trade space using differential evolution [11], based on a reduced-dimension subset of the objectives. As a result, instead of a single solution, ATSV provides unconstrained dimensions and allows decision makers to analyze interactions of design variables.
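A Pareto frontier of non-dominated solutions can be computed with a direct pairwise check. This sketch assumes all objectives are minimized and uses hypothetical (cost, mass) scores; it only filters an existing set of designs and does not reproduce ATSV's differential-evolution sampling.

```python
def dominates(a, b):
    """True if design `a` is no worse than `b` in every objective and strictly
    better in at least one (all objectives are minimized here)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(designs):
    """Names of the non-dominated designs, using an O(n^2) pairwise check."""
    return sorted(
        name for name, objs in designs.items()
        if not any(dominates(other, objs) for other in designs.values())
    )


# Hypothetical designs scored on (cost, mass); lower is better for both.
designs = {"A": (10, 5), "B": (8, 7), "C": (12, 4), "D": (11, 6), "E": (9, 9)}
print(pareto_front(designs))  # ['A', 'B', 'C']  (D is dominated by A, E by B)
```

A design never dominates itself (it is not strictly better in any objective), so the comparison can safely include every design. The frontier still leaves the decision maker to choose among A, B, and C, which is where a priori preferences would normally come in.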

Limitations and Benefits of ARL Trade Space Visualizer (ATSV)

Design by shopping, which was introduced by Rick Balling [12] in 1998, enables a posteriori articulation of preferences by allowing decision makers to view a variety of feasible designs and then select a preference after visualizing the trade space, seeking an optimal design based on this preference. Using multi-dimensional data visualization (glyph plots, histogram plots, parallel coordinates, scatter matrices, brushing, and linked views), ATSV displays multiple plots simultaneously and lets decision makers apply preferences interactively. This exploration also provides additional information about other areas of the trade space that may affect decision makers’ preferences. Furthermore, the automation used in ATSV implements the shopping paradigm by analyzing a large number of designs in a short period of time. In spite of these benefits, as a new technique, ATSV still needs to mature in some areas. These include developing faster graphical interfaces, understanding the impact of problem size and complexity on user performance, and limiting the size of the problem based on those findings.[13] Future work is also needed to provide ATSV with techniques for group decision making. Finally, like most available design methods, ATSV does not provide techniques for visualizing the risk and uncertainty associated with systems.
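Design by shopping with brushing can be approximated as range filtering followed by an a posteriori choice. The function names, attributes, and numbers below are hypothetical, and this is a simplified analogue of interactive brushing, not ATSV's implementation.

```python
def brush(designs, limits):
    """Keep designs whose attributes all fall inside the ranges the decision
    maker has 'brushed', e.g. {"cost": (0, 10)}."""
    return {
        name: attrs for name, attrs in designs.items()
        if all(lo <= attrs[key] <= hi for key, (lo, hi) in limits.items())
    }


def shop(designs, limits, prefer):
    """Narrow the trade space, then pick the survivor that scores highest on
    the attribute the decision maker has come to care about."""
    survivors = brush(designs, limits)
    if not survivors:
        return None  # the brushed ranges exclude every design; widen them
    return max(survivors, key=lambda name: survivors[name][prefer])


# Hypothetical trade space produced by some earlier sampling step.
designs = {
    "A": {"cost": 10, "mass": 5, "range": 300},
    "B": {"cost": 8, "mass": 7, "range": 350},
    "C": {"cost": 12, "mass": 4, "range": 280},
    "D": {"cost": 9, "mass": 6, "range": 320},
}
limits = {"cost": (0, 10), "mass": (0, 6)}
print(sorted(brush(designs, limits)))         # ['A', 'D']
print(shop(designs, limits, prefer="range"))  # D
```

The key design choice is that the preference (`prefer`) is supplied only after the trade space has been narrowed, mirroring the a posteriori articulation of preferences described above.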

Collaborative Engineering

(Chris Fagan)

Intelligent Negotiation Mechanism states that because different disciplines have different goals and knowledge, conflict is unavoidable in the design process. Many multidisciplinary design projects require engineers to work on designs simultaneously, which can lead to confusion due to gaps in communication among engineers. Thus, it is important to design a workflow that keeps the design process from being delayed.[14]


Efforts have been made to find solutions to this issue and make concurrent engineering more effective. Similar to shopping for a design in ATSV, it was found that a visual representation for the management of the project could be beneficial. This first led to Gantt charts, a basic planning tool. Gantt charts can only display information entered by a user. As a result, their output is only as good as the information put in, and if there are changes midstream, the program may or may not be able to accurately predict a change.[14]

There was still a desire for a program that could bridge the gap between engineering design and project management. Concurrent Simultaneous Engineering Resource View (ConSERV) is a knowledge-based project built on the idea that there is a relationship between design and project management. ConSERV's aim is to provide a visual representation of engineering design activities being done concurrently.[15] Figure 4 shows a graphical representation of this idea.[15]

The ConSERV concept essentially applies a project management approach to the design process. The ConSERV software serves as a decision support system, providing schedule reminders and keeping track of progress. This allows the project manager to oversee all aspects of a multidisciplinary project with ease. The ConSERV methodology can be implemented in 5 stages:[15]

1. Identify the project and parameters

2. Identify the main risk elements

3. Identify the most appropriate management tools

4. Establish the team and the project execution plan

5. Apply the ConSERV concept

Although ConSERV is an entire software package that applies all these design strategies simultaneously, the concepts can be applied with traditional project management software and organization.

Product Data Management

Product data management (PDM) is a very important part of any design. PDM is software that controls and tracks data for different designs. It can save time and money in companies where data can otherwise be lost. One such software tool is Teamcenter. Teamcenter allows people from all over the world to work on one system design while keeping all the data on a central server. It also acts as an overseer for sensitive files such as CAD files, allowing only certain users to modify a part and preventing data loss.

More on PDM can be found in the PDM wiki.
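The central storage and "only certain users may modify a part" behavior described for PDM can be sketched as a small in-memory vault with exclusive check-out. The `Vault` class and its methods are hypothetical and are not Teamcenter's API; a real PDM system adds persistence, networking, and much richer access control.

```python
class Vault:
    """Hypothetical, minimal PDM vault: one central store, a set of users
    allowed to modify each file, and exclusive check-out so edits can't collide."""

    def __init__(self):
        self._revisions = {}  # file name -> list of revisions, oldest first
        self._editors = {}    # file name -> users allowed to modify it
        self._locks = {}      # file name -> user who currently has it checked out

    def add(self, name, content, editors):
        self._revisions[name] = [content]
        self._editors[name] = set(editors)

    def check_out(self, name, user):
        if user not in self._editors[name]:
            raise PermissionError(f"{user} is not allowed to modify {name}")
        holder = self._locks.get(name)
        if holder is not None and holder != user:
            raise RuntimeError(f"{name} is already checked out by {holder}")
        self._locks[name] = user
        return self._revisions[name][-1]

    def check_in(self, name, user, content):
        if self._locks.get(name) != user:
            raise RuntimeError(f"{user} does not have {name} checked out")
        self._revisions[name].append(content)  # add a revision; old ones are kept
        del self._locks[name]

    def history(self, name):
        return list(self._revisions[name])


vault = Vault()
vault.add("bracket.prt", "rev A", editors=["alice", "carol"])
vault.check_out("bracket.prt", "alice")
vault.check_in("bracket.prt", "alice", "rev B")
print(vault.history("bracket.prt"))  # ['rev A', 'rev B']
```

Keeping every revision instead of overwriting, and refusing a second check-out while one is outstanding, are the two mechanisms that prevent the lost data mentioned above.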

Geometric Dimensioning & Tolerancing (GD&T)

Geometric Dimensioning and Tolerancing, or 'GD&T', is a symbolic language applied to mechanical part drawings (2D drawings or annotated 3D CAD bodies), to specify the allowable imperfection of part features. ASME Y14.5 is a standard that defines GD&T from a design function point of view. ISO 1101, plus over 20 other ISO standards, form a rough equivalent to ASME Y14.5, but more from a dimensional metrology point of view. GD&T is defined by design, then used within design, and in manufacturing, procurement, and inspection. It is a language that enables unambiguous communication of geometric imperfection specifications and measurement data for mechanical parts and assemblies.

GD&T methods provide control of the form, size, orientation and location of features. Within GD&T are also concepts that enable the establishment of meaningful and repeatable frames of reference, called 'Datum Reference Frames' that are used to relate the tolerance zones for orientation or location controls in a functionally meaningful way.

The 'Datum Features' that are identified as part of the process for establishing Datum Reference Frames are generally the features that mate with other parts in an assembly. By dealing with the identification and further specification of datum features, a designer's attention is drawn to those mating features. This focus and awareness make the achievement of simple and effective mating part interfaces, that clearly and effectively constrain the rotational and translational degrees of freedom of a part within an assembly, more likely than if GD&T is not used.

The alternative to using GD&T is to attempt to specify the geometric imperfection of features with the only tool being directly toleranced dimensions. Ambiguity will be encountered when attempting to control orientation or location of features with directly toleranced dimensions. Ambiguity will also be encountered regarding the definition of directly toleranced size dimensions. ASME Y14.5 provides requirements for size tolerances that do not exist if GD&T is not used. GD&T has been developed to solve problems encountered in the past due to those ambiguities.

A mechanical design is not complete until achievable and unambiguous specification of geometric imperfection of part features has been developed and applied to the drawings (2D or 3D) that define the part(s) in the assembly. Lacking complete specification may lead to subsequent design changes that will be needed to accommodate the imperfection associated with physical parts.

GD&T is often misunderstood to be a drafting or manufacturing subject. GD&T specifications and the associated 'Tolerance Stack-up' analysis, which requires GD&T for an unambiguous basis, are primarily design subjects. Tolerance analysis methods and dimensional metrology methods can become quite involved for critical or complex features. GD&T is a fundamental method that all mechanical design engineers need in order to communicate part geometry specifications effectively.
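For a simple one-dimensional chain of dimensions with symmetric ± tolerances, a tolerance stack-up is commonly computed two ways: worst case (tolerances add linearly) and root-sum-square (RSS, a statistical estimate that assumes independent, roughly normal, centered variation). The dimensions below are hypothetical, and this sketch ignores the geometric tolerances (form, orientation, location) that a full GD&T-based analysis would include.

```python
import math


def stack_up(chain):
    """One-dimensional tolerance stack-up.

    chain: list of (nominal, tol, direction), where tol is a symmetric +/- value
    and direction is +1 or -1 depending on which way the dimension runs
    around the loop toward the gap of interest.
    Returns (nominal gap, worst-case +/- tolerance, RSS +/- tolerance).
    """
    nominal = sum(direction * nom for nom, _, direction in chain)
    worst_case = sum(tol for _, tol, _ in chain)          # every part at its limit
    rss = math.sqrt(sum(tol ** 2 for _, tol, _ in chain))  # statistical estimate
    return nominal, worst_case, rss


# Hypothetical example: the gap left when two parts sit inside a housing.
chain = [
    (50.0, 0.10, +1),  # housing inner length, mm
    (20.0, 0.05, -1),  # part 1 length, mm
    (29.5, 0.05, -1),  # part 2 length, mm
]
gap, wc, rss = stack_up(chain)
print(f"gap = {gap:.2f} mm, worst case +/- {wc:.2f} mm, RSS +/- {rss:.3f} mm")
# gap = 0.50 mm, worst case +/- 0.20 mm, RSS +/- 0.122 mm
```

Worst case guarantees assembly for every combination of in-tolerance parts; RSS gives a tighter estimate but accepts a small statistical chance that the gap falls outside the predicted band.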

While GD&T can be applied fully and separately for all controllable characteristics of all part features, it is common in industry to use GD&T as a risk management tool. A general 'Profile of a Surface' tolerance is applied via a general note on the drawing, then the most critical features receive specifically applied tolerances in the field of the drawing. This method enables more focus upon the most critical functional concerns. Similar risk management cannot be achieved with only directly toleranced dimensions, due to the great ambiguity that would be encountered.

DFX (Design for X)

DFX is the process of designing for a specific trait. This can include design for manufacturing, safety, performance, marketing, environment, cost, and flexibility.

Design For Manufacturing

(Adam Aschenbach)

What is it?

Design for manufacturability (DFM) is a principle that engineers use to design parts that can be easily manufactured. DFM usually concentrates on reducing the cost of part production. Designing for manufacturability should start at the beginning of product development and should be improved upon throughout the design process. Engineering should work with manufacturing and other functional groups to design parts that can be easily manufactured.

Why use it?

By thinking about the manufacturability of parts from the beginning of a project, engineers and manufacturing can work together to create cost effective parts that satisfy both groups. Parts that are designed for manufacturability and assembly generally cost less to produce than parts that are not designed with these considerations. Since cost reduction is one of the main goals of DFM, DFM is a principle known and used at most companies.

DFM Guidelines and Common Practices

Examples of Designing for Manufacturability

• Using the same screws throughout a product to reduce the number of tools on the assembly line, the number of tool changes when machining, and the number of different screws that are held in inventory

• Creating access holes for assembly workers that allow for easy installation of screws in tight spaces

• Reducing the number of parts within an assembly so there is less time spent assembling

• Leaving enough space between parts to allow for tool clearance

Design for Safety

(Adam Aschenbach)

Designing for safety is used when the safety of the people who use and are around the parts is most important. Designing spent nuclear fuel transport vessels is a good example of designing for safety. The transport vessels for spent nuclear fuel have to be extremely robust and able to withstand a massive amount of punishment. For example, the vessel has to withstand a 40-inch drop onto a 6-inch-diameter steel spike. The vessel must also withstand a 30-foot drop onto an unyielding surface. Both of these tests demonstrate the safety of the transport vessel. In general, designing for safety does not treat cost as a primary design parameter, because making a part or system safe often costs a lot of money.

Design for Performance

(Karl Jensen)

Design for performance (DFP) is a specialized area. Many aspects of DFP differ from other areas of design. Here, the design is all about getting as much performance out of the product as possible. Often, little care is given to cost and manufacturability. Examples of where this applies include the racing industry (automotive and otherwise), space missions, and some military projects (such as fighter jets). To get the best performance out of a design, it is important to model and simulate the product before it is built. Modeling and simulation can be a complex process, but it can save time and money later in the design process, as well as produce the best performance out of the design. Through simulation combined with design of experiments (DoE), trends can be found between design variables that can be used to achieve maximum performance. Complex system design is one idea that can be applied well to DFP: by looking at the product as a whole, complex interactions between large systems can be found.
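A minimal sketch of how a two-level full-factorial DoE run against a simulation can expose trends and interactions between design variables. The `simulate` function is a hypothetical stand-in for a real performance model, and the factor names are illustrative; effects are computed in coded units (-1 = low, +1 = high).

```python
from itertools import product


def full_factorial(factors):
    """Two-level full factorial: every combination of low (-1) and high (+1)."""
    return [dict(zip(factors, levels)) for levels in product((-1, 1), repeat=len(factors))]


def main_effect(runs, responses, factor):
    """Mean response at the high level minus mean response at the low level."""
    high = [y for run, y in zip(runs, responses) if run[factor] == 1]
    low = [y for run, y in zip(runs, responses) if run[factor] == -1]
    return sum(high) / len(high) - sum(low) / len(low)


def interaction_effect(runs, responses, a, b):
    """Two-factor interaction: the same contrast, using the product of coded levels."""
    plus = [y for run, y in zip(runs, responses) if run[a] * run[b] == 1]
    minus = [y for run, y in zip(runs, responses) if run[a] * run[b] == -1]
    return sum(plus) / len(plus) - sum(minus) / len(minus)


def simulate(run):
    """Hypothetical stand-in for a performance simulation (coded units)."""
    w, p = run["wing_angle"], run["tire_pressure"]
    return 100 + 8 * w - 3 * p + 2 * w * p


runs = full_factorial(["wing_angle", "tire_pressure"])
responses = [simulate(run) for run in runs]
for factor in ("wing_angle", "tire_pressure"):
    print(factor, main_effect(runs, responses, factor))
print("interaction", interaction_effect(runs, responses, "wing_angle", "tire_pressure"))
# wing_angle 16.0 / tire_pressure -6.0 / interaction 4.0
```

In a two-level design each effect equals twice the corresponding coefficient in coded units, so the printed values recover the 8, -3, and 2 built into the stand-in model. With a real simulation, the nonzero interaction term is the kind of coupling between variables that a one-factor-at-a-time study would miss.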

(Blake Giles)

The following diagram depicts a typical design process geared toward the design of high performance complex systems such as airplanes and space vehicles.

Step 1: Qualitatively define the overall goal of the mission and its objectives. This statement should be referred to throughout the design cycle to ensure that the mission needs are being met.[16]

Step 2: Quantify how well the mission objectives should be met to allow for success. These should be high level performance metrics of system attributes. For example, in the design of an automobile, these metrics would be acceleration, cornering, fuel economy, etc. These metrics are certainly subject to change throughout the design cycle and should be revisited.

Step 3: Define and characterize concepts that will meet mission objectives. This brainstorming activity should enumerate the possibilities of several different concepts that could potentially lead to mission success.

Step 4: Define alternate mission elements or mission architectures to meet the requirements of the mission concept. Architectures are the high level descriptions of the physical systems and sub-systems that carry out functions to accomplish the mission concepts.

Step 5: Identify the principal cost and performance drivers. These are the architectures that have a relatively high impact on system cost and performance. Identifying these drivers early allows the design team to balance performance and cost.

Step 6: Characterize the mission architectures by defining the sub-systems of the vehicle, including weight, power, and cost. Here mathematical models can be applied to describe sub-system performance.

Step 7: Evaluate quantitative requirements and identify critical requirements.

Step 8: Quantify how well requirements and broad objectives are being met relative to cost and architectural choices.

Step 9: A baseline design is a single consistent definition of the system which meets most or all of the mission objectives. A consistent system definition is a single set of values for all of the system parameters which fit together.

Step 10: Translate broad objectives and constraints into well-defined system requirements.

Step 11: Translate system requirements into component level requirements.

Design for Marketing

(Adam Aschenbach)

Design for marketing is a way to design parts and systems so that they will be marketable. Some products may have great functionality but never sell in large quantities because they are not marketable. An example of design for marketing was presented in class. When Professor Burke worked at HP, marketing came to the engineers and said that they wanted the print cartridges to fit into beveled cardboard boxes. Although these boxes may have been slightly harder to produce and assemble, they were used as a way to differentiate HP print cartridges from those of all their competitors. This made it easy for customers to tell which cartridges they needed for their HP printers, and the boxes had more appeal due to their unique shape.

PPP

3P is a product and process design tool or methodology. The three P's stand for production, preparation, process. Derived from lean manufacturing, 3P is a 'Design For Manufacturability' approach in which much of the product or process design effort is aimed at reducing waste in the manufacturing process. Whereas continual improvement, also derived from lean, aims to improve the manufacturing process iteratively in small, low-impact increments over time, 3P allows engineers to redesign a process from scratch and seek better-performing solutions. This type of overhaul yields higher performance but requires more resources and capital.

The goal of a 3P team is to meet customer requirements while using the least amount of resources and achieving a quick time to implementation. A cross-functional team is selected to represent multiple aspects of the product or design. In a multi-day process, the team reviews customer requirements and brainstorms several possible solutions. These solutions are evaluated for merit, and three are selected for prototyping. Physical representations are built to better understand the qualities of the solutions, and finally one is selected for implementation.

Failure Modes and Effects Analysis

(Adam Aschenbach)

What is it?

FMEA is a tool that can be used to determine possible failure modes for a product and the severity of those failures. Usually a list of parts within a system is created, and then failure modes for each part or subassembly are found through group brainstorming. Once a list of failure modes is determined, each failure mode is given a score for severity (S), occurrence (O), and detection (D). Once these numbers are determined (each on a scale of 1 to 10), the risk priority can be found. Rating tables are available that describe what each rating from 1 to 10 may correspond to for severity, occurrence, and detection.

The risk priority number (RPN) is found by multiplying the three scores: RPN = S × O × D. In general, a higher RPN means that the specified failure mode should have a higher design priority than a failure mode with a lower RPN. At the end of an FMEA meeting, tasks are usually assigned to people who are present. These tasks pertain to unknowns discovered while participating in the FMEA. By assigning tasks to specific people, there is a higher probability that the tasks will be completed and that the document will be updated with correct information.
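The risk priority calculation can be sketched in a few lines. The failure modes and scores below are hypothetical, and the comments on the rating scales reflect a common convention (for example, a higher D means the failure is harder to detect) that the text above does not itself specify.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    item: str
    mode: str
    severity: int    # S: 1 (negligible) to 10 (hazardous)
    occurrence: int  # O: 1 (remote) to 10 (almost certain)
    detection: int   # D: 1 (almost certain to be caught) to 10 (unlikely to be caught)

    def __post_init__(self):
        for field_name in ("severity", "occurrence", "detection"):
            value = getattr(self, field_name)
            if not 1 <= value <= 10:
                raise ValueError(f"{field_name} must be between 1 and 10, got {value}")

    @property
    def rpn(self):
        """Risk priority number: S x O x D."""
        return self.severity * self.occurrence * self.detection


def prioritize(modes):
    """Highest RPN first, i.e. the order in which to spend design effort."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


modes = [
    FailureMode("label", "fades in sunlight", 2, 6, 2),
    FailureMode("housing seal", "leaks", 8, 3, 3),
    FailureMode("mounting screw", "loosens under vibration", 7, 4, 6),
]
for m in prioritize(modes):
    print(f"{m.rpn:4d}  {m.item}: {m.mode}")
```

Note that the seal has the highest severity yet ranks second, because it is fairly easy to detect; this is why the three scores are combined rather than ranking by severity alone.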

Is FMEA a concurrent engineering tool?

The people involved in FMEA sessions usually come from many different disciplines, typically engineering, customer service, marketing, and manufacturing, as well as management. All of them have to work together to determine the possible failure modes for parts and the scores associated with each failure mode. People from customer service may know about past products and can use that knowledge to score occurrence; they may also be able to brainstorm possible failure modes for parts similar to ones used in the past. People from manufacturing may be able to judge how detectable a certain failure mode is, because they have worked on the assembly lines or have experience in failure detection. Bringing a diverse group of people together early in the design phase allows more problems to be identified and properly scored.

Why use it?

Many organizations, from small engineering firms to large agencies such as NASA, use FMEA to minimize some of the risks associated with introducing a new product. FMEA can be used as a design tool to help prevent failures and improve product robustness, and a well-written FMEA document can serve as a reference when designing new products.

When should it be used?

An FMEA document should be started at the onset of a project. It should be updated whenever there is a significant design revision, new regulations are introduced, customer feedback indicates a problem, or the operating conditions change.

Advantages

•If possible failure modes can be realized and eliminated early in the design process, the resulting product will be more robust and fewer changes will need to be made after the product is released.

•Cooperating with different functional groups can bring up failure modes that engineering alone may not have seen.

•Creates a document that can be referenced for future projects that may use the same parts or practices.

Limitations

•It is unlikely that all of the failure modes will be realized even if significant time is spent in FMEA meetings.

•FMEA is dependent on the knowledge of those involved in the process. If the people involved do not have experience with past products or previous failure modes, the document may not be as strong as it could be.

•Risk numbers can be misleading; ultimately, judgment must be used to decide which failure modes carry the highest risk.
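One way RPNs mislead is that very different combinations of S, O, and D can produce the same product. The sketch below uses hypothetical ratings to show two failure modes tying at RPN 60 even though one is catastrophic. The `by_judgment` rule (review anything with severity 9 or above first, then sort by RPN, breaking ties by severity) is an assumed example of applying judgment, not a rule stated in this text.

```python
def rpn(s, o, d):
    """Risk priority number for severity, occurrence, and detection ratings."""
    return s * o * d


# Hypothetical (S, O, D) ratings chosen to expose the limitation.
modes = {
    "A": (10, 2, 3),  # catastrophic but rare and easy to detect -> RPN 60
    "B": (2, 5, 6),   # minor, fairly frequent, hard to detect   -> RPN 60
    "C": (4, 6, 5),   # moderate on every axis                   -> RPN 120
}


def by_rpn(modes):
    """Plain RPN ranking: A and B tie even though A is far more severe."""
    return sorted(modes, key=lambda k: rpn(*modes[k]), reverse=True)


def by_judgment(modes, severity_threshold=9):
    """Assumed rule: review any mode at or above the severity threshold first,
    then order by RPN, and break RPN ties by severity."""
    def key(k):
        s, o, d = modes[k]
        return (s >= severity_threshold, rpn(s, o, d), s)
    return sorted(modes, key=key, reverse=True)


print(by_rpn(modes))       # ['C', 'A', 'B']
print(by_judgment(modes))  # ['A', 'C', 'B']
```

Python's `sorted` is stable even with `reverse=True`, so the tied modes A and B keep their original order in the plain ranking; only the added severity criterion separates them meaningfully.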

Collaborative decision making within optimization domain

(Farzaneh Farhangmehr)

Decision making during the design process has three main steps: identifying the options, determining the expected outcome of each option, and expressing values (preferences). Because multidisciplinary systems are complex, they are mostly designed by concurrent design teams, and collaborative decision making brings its own challenges. The collaborative optimization strategy was first proposed in 1994 by Balling and Sobieszczanski-Sobieski [17] and Kroo et al.[18] Two years later, in 1996, Renaud and Tappeta [19] extended it to multi-objective optimization for non-hierarchic decisions. In recent years, much research has addressed the challenges of collaborative decision-based design, with the aim of eliminating communication barriers within the design team throughout the design lifecycle. An agent-based decision network,[20] a multi-agent architecture for collaboration,[21] and a decision-based design framework for collaborative optimization[22][23] are examples of these approaches. (See also decision-based software development, design, and maintenance by Chris Wild et al.[24]) Despite their differences, all of these methods should be able to support decision making while taking the following facts into account:

- decisions might have different sources and disciplines;

- they might be in conflict with each other due to different criteria;

- decision makers might be individuals or groups;

- decisions might be made sequentially or concurrently;

- designers make decisions based on personal experience;

- information might be uncertain and fuzzy.

In multidisciplinary complex systems, decision making means selecting the options that maximize an objective function, while optimization methods (a form of automated decision making) seek such options while minimizing the number of times the objective function is evaluated. From a decision-making perspective, optimization techniques select the most preferred design options from the set of alternatives without evaluating every alternative in detail. As a result, optimization techniques speed up design through automation.
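The claim that an optimizer finds the preferred option without evaluating every alternative can be illustrated with a minimal sketch, assuming a single continuous design variable and a unimodal objective. Golden-section search is used only as one simple optimizer; the text does not prescribe it, and `golden_section_max` and `profit` are hypothetical names. The comparison is in the number of objective evaluations.

```python
import math


def golden_section_max(f, lo, hi, tol=1e-3):
    """Maximize a unimodal f on [lo, hi]; return (x_best, evaluations)."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    evals = 2
    while b - a > tol:
        if fc > fd:   # the maximum lies in [a, d]; reuse c as the new d
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:         # the maximum lies in [c, b]; reuse d as the new c
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
        evals += 1
    return (a + b) / 2, evals


def profit(x):
    """Hypothetical single-peaked objective with its maximum at x = 3.7."""
    return 10.0 - (x - 3.7) ** 2


# Exhaustive search: evaluate every alternative on a 0.001 grid over [0, 10].
grid = [i / 1000 for i in range(10_001)]
x_grid = max(grid, key=profit)

x_opt, n_evals = golden_section_max(profit, 0.0, 10.0)
print(f"exhaustive:     x={x_grid:.3f} after {len(grid)} evaluations")
print(f"golden-section: x={x_opt:.3f} after {n_evals} evaluations")
```

Each iteration reuses one of the two interior points, so only one new evaluation is needed per step while the interval shrinks by a factor of about 0.618.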

As mentioned above, decision making has three main elements: identifying the options, determining the expected outcome of each option, and expressing values. An optimization problem, which maximizes or minimizes an objective function while satisfying all constraints, can therefore be modeled as a decision-making tool. In this framing, the option space is the set of possible values of x in the feasible region, the expectation is modeled as F(x), and the preference is expressed by maximization or minimization. The optimizer then aims to maximize the expected von Neumann-Morgenstern (VN-M) utility of the profit or net revenue. This figure shows the basic architecture of collaborative optimization developed by Braun et al.[25]
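The mapping from decision elements to an optimization problem can be sketched on a toy problem. Everything here is a hypothetical assumption for illustration: a single capacity variable x, a budget constraint, three demand scenarios with assumed probabilities, and an exponential utility u(p) = 1 - exp(-p/R), one common risk-averse VN-M utility. Options are the feasible x values, the expectation F(x) is the expected utility, and the preference is maximization.

```python
import math

PRICE, UNIT_COST, BUDGET = 10.0, 6.0, 600.0   # hypothetical economics
DEMAND = [(50, 0.3), (80, 0.5), (120, 0.2)]   # hypothetical (demand, probability)
RISK_TOLERANCE = 200.0                         # hypothetical R in u(p) = 1 - exp(-p/R)


def feasible(x):
    """Constraint g(x) = cost - budget <= 0."""
    return UNIT_COST * x <= BUDGET


def profit(x, demand):
    """Sales are capped by demand; every unit of capacity costs UNIT_COST."""
    return PRICE * min(x, demand) - UNIT_COST * x


def utility(p):
    """Concave (risk-averse) exponential utility of profit."""
    return 1.0 - math.exp(-p / RISK_TOLERANCE)


def expected_utility(x):
    """F(x): the expectation of utility over the demand scenarios."""
    return sum(prob * utility(profit(x, d)) for d, prob in DEMAND)


options = [x for x in range(0, 201, 10) if feasible(x)]  # option identification
best = max(options, key=expected_utility)                # preference: maximize F(x)
print(best, round(expected_utility(best), 3))
```

With these made-up numbers, the risk-averse choice (x = 60) is smaller than the capacity that maximizes expected profit alone (x = 80), because the utility function penalizes the loss-prone low-demand scenario.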

Risk and Uncertainty Management

(Farzaneh Farhangmehr)

Risk and Uncertainty

The main duty of design teams during the design and development of engineering systems is to make optimal decisions in uncertain environments. Their decisions must satisfy the constraints associated with the system. One such limitation is risk, which might lead to failure or suboptimal performance of the system. Uncertainties, in turn, strongly affect the critical factors and assumptions underlying each decision, and without a plan for managing them, changing resources (market, time, etc.) increase the costs of design and decision making.

Because the term is used in diverse fields, including design, engineering analysis, and policy making, there are several definitions of “uncertainty”, such as “a characteristic of a stochastic process that describes the dispersion of its outcome over a certain domain”[26] or “The lack of certainty. A state of having limited knowledge where it is impossible to exactly describe existing state or future outcome, more than one possible outcome.”[27] Risk, in turn, is defined as “a state of uncertainty where some possible outcomes have an undesired effect of significant loss”.[27]

Risk management

In the literature, risk management generally refers to the act or practice of controlling risk.[28] Based on this definition, risk management includes the processes of planning, identifying, analyzing, mitigating, and monitoring risks.[29] The International Organization for Standardization identifies the following principles of risk management:[30][31]

- Risk management should create value.

- Risk management should be an integral part of organizational processes.

- Risk management should be part of decision making.

- Risk management should explicitly address uncertainty.

- Risk management should be systematic and structured.

- Risk management should be based on the best available information.

- Risk management should be tailored.

- Risk management should take into account human factors.

- Risk management should be transparent and inclusive.

- Risk management should be dynamic, iterative and responsive to change.

- Risk management should be capable of continual improvement and enhancement.

According to the standard ISO/DIS 31000 'Risk management -- Principles and guidelines on implementation',[30] the process of risk management consists of several steps as follows:[31]

- Identification of risk in a selected domain of interest

- Planning the remainder of the process.

- Mapping out the following:

  - the social scope of risk management

  - the identity and objectives of stakeholders

  - the basis upon which risks will be evaluated, and constraints.

- Defining a framework for the activity and an agenda for identification.

- Developing an analysis of risks involved in the process.

- Mitigation of risks using available technological, human and organizational resources.

- Risk planning: The first step of risk management is to provide the design team with a list of possible risk identification methods, assessment techniques, and mitigation tools, and then to allocate resources to them by balancing their cost (time, money, etc.) against their value to the project.

- Risk identification: The taxonomy applied in the NASA Systems Engineering Handbook's “Management Issues in Systems Engineering” [37] categorizes risks into organizational, management, acquisition, supportability, political, and programmatic risks. Risks can be identified by applying previously examined risk templates, conducting expert interviews, reviewing lessons-learned documents from similar projects, and analyzing potential failure modes and their propagation with FMEA, FMECA, Fault Tree Analysis, etc.

- Risk mitigation and monitoring:

  - Do nothing and accept the risk: gather further risk information and conduct future assessments.

  - Share the risk with a co-participant: share it with an international partner or contractor (e.g., incentive contracts, warranties).

  - Take preventive action to reduce the risk: additional planning (e.g., additional testing of subsystems and systems, designing in redundancy, off-the-shelf hardware).

  - Plan for contingent action: additional planning.

Uncertainty Management

The evaluation of the sources, magnitude, and mitigation of risks associated with systems has become a concern for designers throughout the design process and life cycle of complex systems. Designers should understand attitudes toward risk, know where more information is needed, and anticipate the possible consequences of the decisions that must be made during the design process. However, inevitable uncertainties associated with systems change the critical factors and assumptions underlying these decisions, and might either increase design costs or open up new opportunities.

Uncertainty Classification [32]

Uncertainty can be due to a lack of knowledge (epistemic or knowledge uncertainty) or to randomness in nature (aleatory, variability, random, or stochastic uncertainty). One source of uncertainty, ambiguity uncertainty [6-8], results from incomplete or unclear definitions, faulty expressions, or poor communication.

Model uncertainty includes the uncertainties associated with using a process model or a mathematical model. It may result from mathematical errors, programming errors, or statistical uncertainty. Mathematical errors include approximation errors, which are due to deficiencies in models of physical processes, and numerical errors, which result from finite-precision arithmetic [9]. Programming errors are errors caused by hardware or software [10-13], such as bugs in software or hardware, errors in code, and inaccurately applied algorithms. Statistical uncertainty comes from extrapolating data to select a statistical model or to provide more extreme estimates [14].

Uncertainties associated with the behavior of individuals in design teams (designers, engineers, etc.), organizations, and customers are called behavioral uncertainty. Behavioral uncertainty arises from four sources: human errors, decision uncertainty, volitional uncertainty, and dynamic uncertainty. Human errors [15-16] are uncertainties due to individuals’ mistakes. Decision uncertainty arises when decision makers have a set of possible decisions and only one can be selected. Volitional uncertainty refers to unpredictable decisions of subjects during the stages of design [14]. Dynamic uncertainty arises when changes in organizational or individual variables, or unanticipated events (e.g., economic or social changes), change design parameters that had been determined initially. Dynamic uncertainty also includes uncertainties resulting from degrees of belief, where only subjective judgment is possible.

Finally, uncertainties associated with the inherent nature of processes are called natural randomness. This type of uncertainty is irreducible, and decision makers cannot control it in the design process.
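Numerical error from finite-precision arithmetic is easy to observe directly. This small sketch uses Python's standard `float` (IEEE 754 double precision), `math.fsum`, and `decimal.Decimal`; the value 0.1 is chosen only because it has no exact binary representation. It also shows two ways of reducing that error: a more careful summation algorithm and decimal arithmetic.

```python
import math
from decimal import Decimal

values = [0.1] * 10  # ten copies of 0.1, which binary floats cannot represent exactly

naive = sum(values)                               # rounding error accumulates at each addition
compensated = math.fsum(values)                   # tracks the low-order bits lost by naive addition
exact = sum(Decimal("0.1") for _ in range(10))    # decimal arithmetic represents 0.1 exactly

print(naive)        # 0.9999999999999999
print(compensated)  # 1.0
print(exact)        # 1.0
```

The error here is tiny, but in long simulations or ill-conditioned calculations such errors can accumulate into a meaningful source of model uncertainty.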

This figure shows the uncertainty classification provided by CACTUS [33].

Uncertainty assessment [32]

Attempts to quantify uncertainty during the design process have been published, but most focus only on its quantitative aspects [17]. These technical methods have to be complemented with qualitative methods, including expert judgment. Although there have been attempts to do this in various fields [18-21], methods that incorporate both types of uncertainty in a design process have not been addressed. Uncertainty assessment methods are generally divided into four major approaches, based on how they analyze data and represent their outputs:

A probabilistic approach characterizes the probabilistic behavior of uncertainties in the model, using a range of methods to quantify the uncertainty in the model output with respect to the random variables among the model inputs. These methods allow decision makers to study how uncertainties in design variables affect the probabilistic characteristics of the model. Probabilistic behavior may be represented in different ways. One basic representation is an estimate of the mean value and standard deviation; although this is the most commonly used result of probabilistic methods, it does not give a clear understanding of the probabilistic characteristics of the uncertainties. Another representation is the probability density function (PDF) and the cumulative distribution function (CDF), which provide the data needed to analyze the probabilistic characteristics of the model.

Although classic statistical assessment approaches clarify the type and level of risk by assessing the associated uncertainties, they cannot take past information into account. To address this problem, Bayesian approaches offer a wide range of methodologies based on Bayesian probability theory, in which the posterior probability of an event is proportional to its prior probability multiplied by the likelihood of the observed data [22-24]. Bayesian logic can also be used to model degrees of belief, a role that becomes more important in large-scale multidisciplinary systems.

Simulation methods analyze the model by generating random numbers and observing the resulting changes in the output. In other words, a simulation approach is a statistical technique that clarifies the uncertainties that should be considered to reach the desired result. Simulation methods are generally applied when a problem cannot be solved analytically or when there are no assumptions on the probability distributions or correlations of the input variables.
The most commonly used simulation-based methodology is Monte Carlo Simulation (MCS) [25, 26]. MCS involves a large number of repetitions, generally between hundreds and thousands. Simulation methods can be used on their own or in combination with other methods.

Methods that incorporate both qualitative and quantitative uncertainties are placed in the fourth category, qualitative approaches. One example is NUSAP [18], which stands for “Numeral, Unit, Spread, Assessment and Pedigree”: the first three categories are quantitative measures, and the last two are qualitative qualifiers that may be applied in combination with other assessment methods, such as Monte Carlo and sensitivity analysis. Some other methods based on Bayesian theory, such as ACCORD® [31], can also be considered assessment techniques that combine both types.
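A Monte Carlo simulation can be sketched with Python's standard `random` and `statistics` modules. The model (axial stress = load / area), the input distributions, and the stress limit of 120 are hypothetical assumptions chosen for illustration. The sketch reports the two representations discussed above: the mean and standard deviation, and one point of the empirical CDF.

```python
import random
import statistics


def model(load, area):
    """Hypothetical model: axial stress = load / area."""
    return load / area


def monte_carlo(n=10_000, seed=42):
    """Sample uncertain inputs n times and collect the model outputs."""
    rng = random.Random(seed)            # fixed seed so runs are repeatable
    outputs = []
    for _ in range(n):
        load = rng.gauss(1000.0, 100.0)  # assumed normal input
        area = rng.uniform(9.5, 10.5)    # assumed uniform input
        outputs.append(model(load, area))
    return outputs


samples = monte_carlo()
mean = statistics.fmean(samples)
stdev = statistics.stdev(samples)

# Empirical CDF at the limit: fraction of runs with stress at or below it.
limit = 120.0
p_within = sum(s <= limit for s in samples) / len(samples)
print(f"mean={mean:.1f} stdev={stdev:.1f} P(stress <= {limit})={p_within:.3f}")
```

The sampling error of such estimates shrinks roughly in proportion to 1/sqrt(n), which is why MCS typically needs many repetitions.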

Uncertainty mitigating and diagnosing methods [32]

Being familiar with the sources of uncertainty and the methods for assessing them is critical, but one challenge remains: how can the effects of these uncertainties on the system be handled and mitigated? And how can they be diagnosed before it is too late and they get out of control? The following methods address uncertainty diagnosis and mitigation:

Uncertainties due to programming errors can be diagnosed by those who made them. Since programming errors may occur during input preparation, module design and coding, and compilation [27], they can be reduced by better communication, software quality assurance methods [28, 29], debugging of computer code, and redundant executive protocols. Using higher-precision hardware and software can mitigate the effect of mathematical uncertainties caused by numerical errors from finite-precision arithmetic, and it also reduces the effect of statistical uncertainties. Statistical uncertainty can also be mitigated by selecting the best data sample in terms of both size and similarity to the model. Similarly, approximation uncertainty is minimized by selecting the best model with an acceptable range of errors and the best assumptions for variables, boundaries, etc.; simulation approaches might be applied to generate that model. Ambiguity uncertainty is naturally associated with human behavior, but it can be reduced by clear definitions, linguistic conventions, or fuzzy set theory [7], [30]. Volitional uncertainty, which results from unpredictable decisions, especially in multidisciplinary design, is diagnosed by other organizations or individuals and is mitigated by hiring better contractors, consultants, and labor [9, 14]. Although human errors and individuals’ mistakes are inevitable in the system, they can be diagnosed and mitigated by applying human factors criteria such as inspection, self-checking, and external checking. When only subjective judgments are possible, the effect of dynamic uncertainty can be mitigated by applying a Bayesian approach [22-24]. This type of uncertainty can also be reduced by applying design optimization methods to minimize the effect of changes in variables or of unanticipated events that cause changes in design parameters.

As with dynamic uncertainty, design optimization is useful for reducing the effect of decision uncertainty when a set of possible decisions is available. Methods based on Bayesian decision theory (such as ACCORD® [31]) can also help decision makers make more informed choices. Sensitivity analysis [32-33] and robust design [34-35] also help by determining which variables should be controlled to improve the performance of the model.
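How a Bayesian approach combines subjective judgment with new evidence can be sketched with the simplest closed-form case, a Beta prior on a failure probability updated with binomial test results. This conjugate Beta-binomial model is an assumption chosen for clarity, not the specific method of the cited works, and the prior and test counts are hypothetical. Exact fractions are used so the posterior mean is easy to read.

```python
from fractions import Fraction


def beta_binomial_update(alpha, beta, failures, trials):
    """Conjugate update: a Beta(alpha, beta) prior plus binomial data gives a
    Beta(alpha + failures, beta + successes) posterior."""
    if not 0 <= failures <= trials:
        raise ValueError("failures must be between 0 and trials")
    return alpha + failures, beta + (trials - failures)


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return Fraction(alpha, alpha + beta)


# Hypothetical expert judgment: "about 1 failure in 10", held with the
# weight of roughly 10 observations.
prior = (1, 9)

# Hypothetical test campaign: 2 failures in 40 trials.
posterior = beta_binomial_update(*prior, failures=2, trials=40)

print(beta_mean(*prior), beta_mean(*posterior))  # 1/10 3/50
```

The posterior estimate (3/50 = 0.06) lies between the expert's prior belief (0.1) and the observed failure rate (2/40 = 0.05), weighted by how much evidence each represents.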

Product Lifecycle

Wikipedia gives a good definition of the product lifecycle in its article “Product Lifecycle”.

References

- Sobek II, Durward K., Allen C. Ward, and Jeffrey K. Liker, “Toyota's Principles of Set-Based Concurrent Engineering,” Sloan Management Review: 67-83.

- Ullman, D. G., “The Mechanical Design Process,” Third Edition, McGraw-Hill, New York, 2002.

- Peterson, C., “Product Innovation for Interdisciplinary Design Under Changing Requirements: Mechanical

- Friedenthal, S., A. Moore, R. Steiner, “A Practical Guide to SysML: The Systems Modeling Language,” Chapter 3, 2008.

- Vanderperren, Y., W. Dehaene, “UML 2 and SysML: Approach to Deal with Complexity in SoC/NoC Design,” Proceedings of the Design, Automation and Test in Europe Conference and Exhibition, 2006.

- Sanford Friedenthal, Alan Moore and Rick Steiner, “A Practical Guide to SysML: The System Modeling Language,” ISBN 978-0123743794.

- Matthew Hause, “An Overview of the OMG Systems Modeling Language (SysML),” Embedded Computing Design, August 2007. http://www.embedded-computing.com/pdfs/ARTiSAN.Aug07.pdf

- Ullman, D., B. Spiegel, “Trade Studies with Uncertain Information,” Sixteenth Annual International Symposium

- D’Ambrosio, B., “Bayesian Methods for Collaborative Decision-Making,” Robust Decisions Inc., Corvallis, OR.

- Gary M. Stump, Timothy W. Simpson, Mike Yukish and John J. O’Hara, “Trade Space Exploration of Satellite Datasets Using a Design by Shopping Paradigm,” 0-7803-8155-6/04/$17.00 © 2004 IEEE.

- R. Storn and K. Price, “Differential evolution: a simple and efficient adaptive scheme for global optimization over continuous spaces,” Technical Report TR-95-012, ICSI, 1995.

- R. Balling, “Design by shopping: A new paradigm,” In Proceedings of the Third World Congress of Structural and Multidisciplinary Optimization (WCSMO-3), Volume 1, pages 295–297, Buffalo, NY, 1999.

- Timothy W. Simpson, Parameshwaran S. Iyer, Link Rotherrock, Mary Frecker, Russel R. Barton and Kimberly A. Barrem, “Metamodel-Driven Interfaces for Engineering Design: Impact of delay and problem size on user performance,” American Institute of Aeronautics and Astronautics.

- Liang, C., J. Guodong, “Product modeling for multidisciplinary collaborative design,” Int. J. Adv. Manuf. Technol., Vol. 30, 2006, pp. 589-600.

- Conroy, G., H. Soltan, “ConSERV, a methodology for managing multi-disciplinary engineering design projects,” International Journal of Project Management, Vol. 15, No. 2, 199, pp. 121-132.

- Test

- Balling, R. J., and Sobieszczanski-Sobieski, J., 1994, “Optimization of Coupled Systems: A Critical Overview of Approaches,” AIAA-94-4330-CP, Proceedings of the 5th AIAA/NASA/USAF/ISSMO Symposium on Multidisciplinary Analysis and Optimization, Panama City, Florida, September.

- Kroo, I., Altus, S., Braun, R., Gage, P., Sobieski, I., 1994, “Multidisciplinary Optimization Methods for Aircraft Preliminary Design,” AIAA-96-4018, Proceedings of the 5th AIAA/NASA/USAF/ISSMO Symposium on Multidisciplinary Analysis and Optimization, Panama City, Florida, September.

- Tappeta, R. V., and Renaud, J. E., 1997, “Multiobjective Collaborative Optimization,” ASME J. Mech. Des., 119, No. 3, pp. 403–411.

- Mohammad Reza Danesh and Yan Jin, “AND: An agent-based decision network for concurrent design and manufacturing,” Proceedings of the 1999 ASME Design Engineering Technical Conferences, Sep 12-15, 1999, Las Vegas, Nevada.

- Zhijun Rong, Peigen Li, Xinyu Shao, Zhijun Rong and Kuisheng Chen, “Group Decision-based Collaborative Design,” Proceedings of the 6th World Congress on Intelligent Control and Automation, June 21–23, 2006, Dalian, China.

- Xiaoyu Gu, “Decision-Based Collaborative Optimization,” Journal of Mechanical Design, Vol. 124, No. 1, pp. 1-13, March 2002.

- X. Gu and J. E. Renaud, “Decision-Based Collaborative Optimization,” 8th ASCE Specialty Conference on Probabilistic Mechanics and Structural Reliability, PMC2000-217.

- Henk Jan Wassenaar and Wei Chen, “An Approach to Decision-Based Design With Discrete Choice Analysis for Demand Modeling,” Journal of Mechanical Design, September 2003, Vol. 125.

- Braun, R. D., Kroo, I. M., and Moore, A. A., 1996, “Use of the Collaborative Optimization Architecture for Launch Vehicle Design,” AIAA-94-4325-CP, Proceedings of the 6th AIAA/NASA/USAF/ISSMO Symposium on Multidisciplinary Analysis and Optimization, Bellevue, WA, September.

- Tumer, I. Y., et al., “An Information-Exchange Tool for Capturing and Communicating Decisions during Early-Phase Design and Concept Evaluation,” ASME International Mechanical Engineering Congress and Exposition, 2005, Orlando, FL: ASME.

- Douglas Hubbard, How to Measure Anything: Finding the Value of Intangibles in Business, John Wiley & Sons, 2007.

- AFMCP 63-101, Acquisition Risk Management Guide, USAF Material Command, Sept. 1993.

- NASA Systems Engineering Handbook, SP-6105, June 1995.

- ISO/DIS 31000, “Risk management -- Principles and guidelines on implementation.”

- http://en.wikipedia.org/wiki/Risk_management

- Farhangmehr, F., Tumer, I. Y., “Optimal Risk-Based Integrated Design (ORBID) for multidisciplinary complex systems,” submitted to ICED09.

- Farhangmehr, F. and Tumer, I. Y., “Capturing, Assessing and Communication Tool for Uncertainty Simulation (CACTUS),” Proceedings of ASME International Mechanical Engineering Congress & Exposition (IMECE 2008), Boston, MA.